- Published on: 03.05.2021

Artificial Intelligence and Ethics

A Paper Tiger Waiting to Be Set Free | MHP – A Porsche Company

Back in 2016, tech giants Amazon, DeepMind, Facebook, Google, IBM and Microsoft came together to form the “Partnership on AI.” Although many have speculated about the motives behind this advantageous partnership, it has drawn a considerable amount of attention to the topic of ethics and artificial intelligence since it was founded. By 2019, the NGO AlgorithmWatch had listed 160 guidelines and recommendations on this topic in its inventory. No doubt several more could be added by now. The organizations that issue such guidelines are as diverse as the motives and perspectives that they bring to the discussion: government ministries and NGOs, research institutes, business associations and companies are all working on this topic. The quality and scope of their recommendations vary significantly, ranging from succinct one-pagers to documents of several hundred pages. For example, the “Ethically Aligned Design” guide issued by the Institute of Electrical and Electronics Engineers (IEEE) is almost 300 pages long.

Clearly, much has been said on this topic so far. It is certainly attracting a lot of interest and attention, and there are now plenty of good recommendations out there. However, there is still a distinct lack of anything more than recommendations or self-imposed business ideals. According to AlgorithmWatch, only 8 of the 160 documents listed in its inventory are binding. A quick glance through these documents is enough to see that even this low figure is rather optimistic. Only four or five of the papers set out a genuinely binding obligation in the sense that the guidelines are applied in practice and therefore result in corresponding governance structures, audit processes or similar actions. As is so often the case in a business context, there is certainly no shortage of knowledge on this topic. It is the implementation of this knowledge that is really the problem. An unwillingness to tackle the issue, naivety and poor execution skills all play their part here.

Establishing Ethics as a Force for Good

Given all this, it seems we need to rethink our perception of ethics, moving away from its “preachy” and obstructive image to the idea that ethics can be a force for good. To succeed, the various reservations that people have about AI must first be taken seriously and then countered with information, transparency and training. It is our “ethical” responsibility to clarify the potential of AI and then use it consistently. For example, artificial intelligence offers more opportunities than virtually any other tool to help us achieve the UN’s 17 Sustainable Development Goals or influence the lives of the “Fridays for Future” generation.

Prioritizing information and transparency means presenting the benefits as well as identifying genuine risks and establishing effective countermeasures to protect individuals, groups or even entire sections of society.

Identifying and Eliminating AI Risks

Social scoring, potentially in conjunction with facial recognition, is currently unthinkable for most democracies, but it has been a reality in China for some time now. Although less drastic at first glance, the manipulative influence of social media is a much more common feature in people’s lives across the globe. Algorithms determine what is shown to us, what we therefore consider to be reality, what we believe and hope, love and hate, and, of course, what we buy. These algorithms present distortions of reality and suggest meaningful relations between things that have no logical connection. This is constructivism in the same format as Plato’s allegory of the cave. Once propaganda bots start influencing our political decision-making and our electoral votes, AI becomes a serious threat to democratic systems.

The use of artificial intelligence can also have far-reaching and very tangible consequences for individuals. For example, an AI-controlled job application process can prematurely terminate an application if an existing bias in the algorithm or data leads to a discriminatory selection. Researchers have already uncovered examples of how AI recruitment systems tend to favor white men. Even if this hurdle can be overcome, the next challenge could be the use of voice analysis software that claims to determine the mental state or sexual orientation of applicants during interviews.

AI-based predictive policing in the style of the sci-fi film “Minority Report” has also been in use for a long time, and there are numerous examples of bias-related discrimination against specific minority groups. Developers are often quite surprised by their own creations. This was the case for a team of Facebook employees who allowed two chatbots to train each other. The bots, which were not rewarded for sticking to English, drifted into a shorthand of their own that the developers could not readily understand, and the experiment was halted. There are endless examples of such challenges, so there is certainly no lack of knowledge about the problems that need tackling. What is lacking is the determination to implement solutions.

“Trustworthy,” “Beneficial” and “Binding”

To sidestep these issues, we build three anchors into every AI ethics project: “trustworthy,” “beneficial,” and “binding.” While the first two are now seen as the cornerstones of good practice for many initiatives, establishing the “binding” element is still the difficult part. Explicitly taking this anchor into account is what differentiates our universal approach from others.

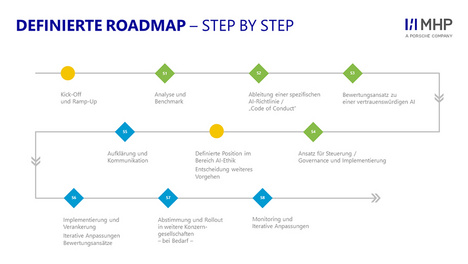

The graphic above illustrates our basic approach for all AI ethics projects:

Following a tailor-made analysis of the necessary attributes, we define things like the roles and governance structures required for the subsequent operational process.

We then help to implement the project using specific change-management measures. We also assign specific criteria to the defined attributes, and the degree to which these criteria are fulfilled is evaluated via a tool-based testing process during each AI project.

For example, the “trustworthy” anchor requires attributes such as “fairness,” because being fair always means, among other things, being non-discriminatory. The first step of the testing process is to evaluate the risk of the relevant AI application. For example, discrimination by an adaptive system in a production environment is far less risky than discrimination in a job application process. The criteria are therefore weighted according to the risk classification of the application. Discrimination generally stems from bias, so it is crucial to be aware of this risk and to actively prevent it. To achieve this, the relevant criteria are examined as follows: Was the development team diverse enough to represent different perspectives? Was the potential for bias taken into account during development of the models and algorithms? Has the source of the historical data been verified and were steps taken to ensure that it does not represent a biased view? Are any tests being run during ongoing operation to detect model drift and data drift? Are the applicable governance structures able to quickly identify and resolve problems? We document the corresponding answers and define appropriate measures as required.
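To make the idea of risk-weighted criteria more concrete, here is a minimal Python sketch of how the documented answers to the fairness questions could be turned into a score. It is not the actual testing tool: the names `Risk`, `Criterion`, `fairness_score` and `open_measures`, the weights 1.0 and 3.0, the choice of which questions count as critical, and the sample answers are all assumptions made for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    """Hypothetical risk classes; the weights are illustrative assumptions."""
    LOW = 1.0   # e.g. an adaptive system in a production environment
    HIGH = 3.0  # e.g. an AI-controlled job application process


@dataclass(frozen=True)
class Criterion:
    question: str
    fulfilled: bool
    critical: bool = False  # assumed flag: critical criteria are scaled by the risk weight


def fairness_score(criteria: list[Criterion], risk: Risk) -> float:
    """Return the weighted share of fulfilled criteria, between 0.0 and 1.0."""
    if not criteria:
        raise ValueError("at least one criterion is required")

    def weight(criterion: Criterion) -> float:
        return risk.value if criterion.critical else 1.0

    total = sum(weight(c) for c in criteria)
    met = sum(weight(c) for c in criteria if c.fulfilled)
    return met / total


def open_measures(criteria: list[Criterion]) -> list[str]:
    """List the questions that still need a documented measure."""
    return [c.question for c in criteria if not c.fulfilled]


# Sample answers, invented for this example.
ANSWERS = [
    Criterion("Was the development team diverse enough?", True),
    Criterion("Was potential bias considered during development?", True),
    Criterion("Was the historical data source verified?", False, critical=True),
    Criterion("Are drift tests run during operation?", True),
    Criterion("Can governance structures resolve problems quickly?", True, critical=True),
]

print(f"{fairness_score(ANSWERS, Risk.LOW):.2f}")   # 0.80
print(f"{fairness_score(ANSWERS, Risk.HIGH):.2f}")  # 0.67
print(open_measures(ANSWERS))
```

With identical answers, the unverified data source pulls the score down further in the high-risk setting, which mirrors the point that the same shortcoming matters more in a job application process than on a production line.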

This approach allows us to operationalize the final “binding” anchor. In contrast to virtually all of the published guidelines, we describe WHAT to do as well as addressing HOW it should be done.

And yet it moves

While we were writing the last few lines of this article, we got the news that the EU is now going to enact a binding set of rules. In doing so, it is consistently following its line of placing digitization under the maxim of “trust” and thus clearly distinguishing itself from the digital giants, the USA and China. A good strategy. Even though there will certainly be no shortage of well-intentioned advice on what could have been done better, this is a very good and important step that sets an example. In the next blog post, we will take a closer look at the EU regulations and the resulting consequences. It is already clear that a violation can be expensive.

Conclusion

Artificial Intelligence has enormous potential, particularly when it comes to accelerating sustainable development. To tackle the reservations that people have about AI, it is important to actively manage its trade-offs. Doing so requires practical approaches that go further than simply publishing guidelines – such approaches must create a binding framework and thus inspire trust. The paper tiger that is AI ethics is ready to be set free. When it finally is, we can be ready for AI to unleash its full potential to the advantage of companies and their stakeholders, society and the environment. The EU seems to have understood this.